If you don't understand how Google's crawl budget works, you could be missing out on search traffic for your site.

The crawl budget affects SEO and Google rankings, which means it impacts how likely it is that searchers will find your site.

Struggling to understand how it all fits together? I'll break it down for you in this step-by-step guide. Keep reading to find out how to optimize Google's crawl budget for your business.

What is the Crawl Budget?

Google defines the crawl budget as the combination of a site's crawl rate limit and the crawl demand for that site's URLs.

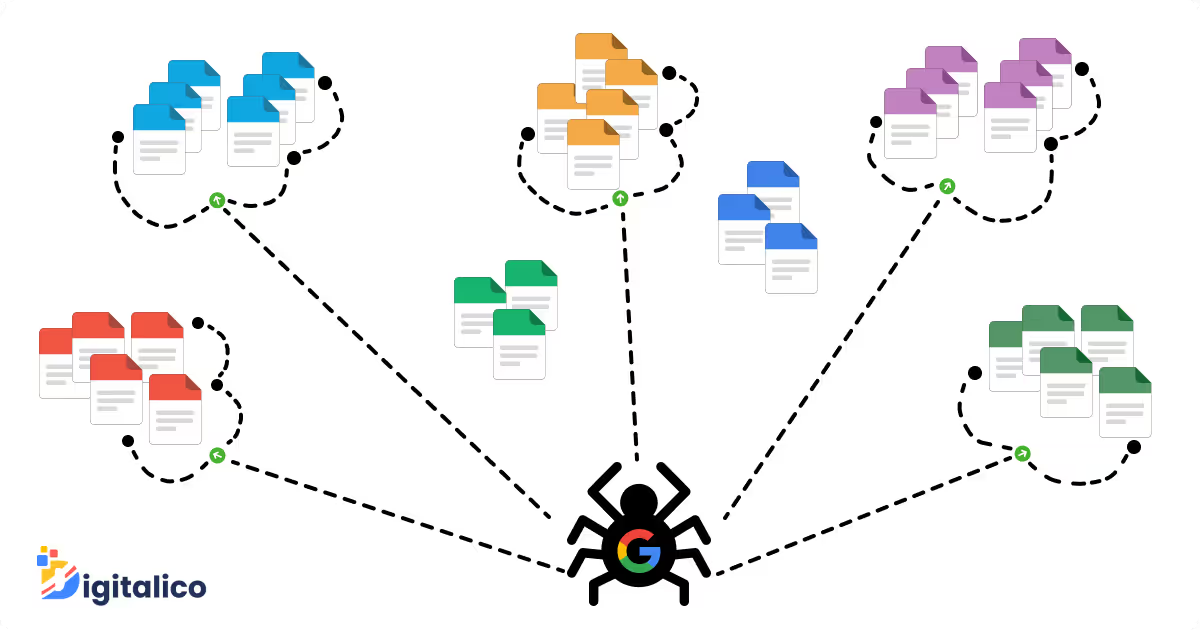

Web pages, or URLs, are crawled by a system called Googlebot.

For smaller sites, a crawl budget is generally not an issue. On these sites, new pages are typically crawled on the day that they go up.

When a site has fewer than a few thousand pages to crawl, Googlebot can usually get through them all pretty easily.

However, for a bigger site, Googlebot has to prioritize the way it will crawl the pages.

The server of a website can only give so many resources for crawling the site before it starts to interfere with a user's experience.

The crawl rate limit defines how much crawling can happen before it starts to affect the quality of a website user's experience.

The crawl rate limit doesn't always stay the same - it might go up if the site is responding fast or down if the site slows down.

It's also possible for the site's owner to manually set a crawl rate limit.

Meanwhile, the crawl demand of a site is another thing that affects Googlebot's activity.

When a web page is very popular online, such as a viral post, Google will crawl that page more often.

Googlebot wants to keep pages freshly crawled.

If a website moves or makes other major changes, Google may also crawl each page to make sure the pages are correctly indexed with the right URLs.

When you combine the crawl rate limit and crawl demand, you end up with the crawl budget: the number of URLs Googlebot both can and wants to crawl.

When your site has a high crawl limit and high demand, the budget is not a problem.

However, when the limit and the demand don't match up well, your budget becomes an issue. With these 20 steps, you can raise your crawl budget whenever you need to.

20 Steps to Optimizing Google's Crawl Budget

1. Check Server Logs for Errors

You need to make sure that your pages don't have errors that are inhibiting Googlebot's crawl process.

In general, there are two return codes you want to see when your pages are crawled.

The codes 200 ("OK") and 301 ("moved permanently, go here instead") work for Googlebot. Most other return codes, especially 404s and 5xx server errors, will hinder the crawl process.

To find out what codes your site is giving Google, you'll need to check your server logs.

Google Analytics won't work here, because it only records pages that load and run its tracking script, so most error responses never show up in your reports.

Use your site's server logs to discover and fix any errors.

There's an easy way to do this - just find any URL that doesn't have the correct return code.

Then, rank those pages in order of how often they come up so you know which ones are causing the biggest problems.

To fix those URLs, you might have to redirect them or change some code.
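The log check described above can be sketched in a few lines of Python. This is a minimal sketch, not a finished tool: it assumes your server writes the common "combined" access log format, it identifies Googlebot by user-agent string alone (which can be spoofed), and the sample lines and the `rank_problem_urls` name are made up for illustration.

```python
import re
from collections import Counter

# Pulls the request path, status code, and user agent out of a
# "combined" format access log line (an assumption about your server).
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

OK_CODES = {"200", "301"}  # the two codes this guide treats as acceptable


def rank_problem_urls(lines):
    """Count Googlebot hits with a code outside OK_CODES, most frequent first."""
    problems = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        if match["status"] not in OK_CODES:
            problems[(match["path"], match["status"])] += 1
    return problems.most_common()


# Hypothetical sample data; in practice, iterate over your real log file.
BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{BOT}"',
    f'66.249.66.1 - - [10/Oct/2023:13:56:02 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{BOT}"',
    f'66.249.66.1 - - [10/Oct/2023:13:57:10 +0000] "GET / HTTP/1.1" 200 2048 "-" "{BOT}"',
    f'66.249.66.1 - - [10/Oct/2023:13:58:44 +0000] "GET /broken HTTP/1.1" 500 128 "-" "{BOT}"',
    '203.0.113.9 - - [10/Oct/2023:13:59:01 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "Mozilla/5.0"',
]

for (path, status), hits in rank_problem_urls(SAMPLE):
    print(f"{hits:>3}  {status}  {path}")
```

The ranked output gives you a worklist: the URLs at the top are the ones wasting the most Googlebot requests.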

2. Check Google Search Console for Errors

If your server logs don't surface all the errors, you can also use Google Search Console.

One easy way to do this is by using a plugin that lets you find the errors when you connect your site to the Search Console.

3. Block Unnecessary Sections

Maybe there are some sections of your site that Google doesn't need.

You can use robots.txt to block those sections - but only if you're absolutely certain they won't be needed.

For example, if you operate a large online store, your site might have multiple ways to filter products by color, size, and so on.

Each different filter setting can add a new URL that Google will be able to find.

However, there's no need to let Google crawl each URL in this case.
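Before you publish a robots.txt rule, it's worth checking that it blocks what you intend and nothing more. Here's a minimal sketch using Python's standard `urllib.robotparser`. The `/shop/filter/` path and the example.com URLs are hypothetical, and the standard-library parser only does simple prefix matching (it doesn't implement Google's wildcard extensions), so the sketch sticks to a plain path prefix.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block an example store's filter URLs, allow the rest.
ROBOTS_TXT = """\
User-agent: *
Disallow: /shop/filter/
"""


def is_crawlable(robots_txt, url, agent="Googlebot"):
    """Return True if the given robots.txt text lets `agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


print(is_crawlable(ROBOTS_TXT, "https://example.com/shop/filter/color-red"))
print(is_crawlable(ROBOTS_TXT, "https://example.com/shop/shoes"))
```

Running a handful of real URLs through a check like this is a cheap way to catch a rule that accidentally blocks pages you do want crawled.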

4. Gain Backlinks

Getting links from other reputable websites is always a good tactic for your site, including when you need to optimize your crawl budget.

Build links by connecting with industry influencers, building your social media reputation, and using great PR strategies.

Link building won't boost your crawl budget overnight, but it will go a long way toward getting your budget where you need it to be over time.

5. Avoid Accelerated Mobile Pages

Accelerated Mobile Pages, or AMP, is a framework for making pages load faster on mobile devices.

AMP pages, however, typically live at separate URLs from the standard versions of your pages.

This means Google has up to twice as many URLs to crawl, so your budget needs to be higher.

If you're already working to build up your budget, don't start working with AMP just yet.

6. Avoid Rich Media Files

Googlebot can now render JavaScript pages as well as plain HTML (in the past, it couldn't), although Google stopped indexing Flash content in 2019.

However, there are still some rich media files that can trip Google up, like Silverlight.

Even if Google can crawl those pages, not all other search engines can.

This can inhibit the rankings of those pages, so where possible, avoid heavy use of rich media files - or even avoid them completely.

7. Give Text Versions Where Needed

You may not always want to or be able to avoid rich media files completely.

If you have pages with a lot of rich media files, make sure you also provide text versions of those pages.

Otherwise, Google will find those pages hard to crawl.

8. Eliminate Broken Links

Broken links will hinder Googlebot's ability to explore your website and get the information it needs.

Although broken links aren't a major factor in web ranking, they can still go some way toward negatively impacting your budget, as well as user experience.
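One rough way to spot broken internal links is to collect every link on each page and compare it against the pages you know exist. The sketch below assumes you already have each page's HTML in a dictionary keyed by site-relative path; the `PAGES` data and function names are hypothetical, and external links are skipped rather than checked.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def find_broken_links(pages):
    """Map each page path to the internal links on it that match no known page."""
    broken = {}
    for path, html in pages.items():
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.links:
            target = urlparse(urljoin(path, href))
            if target.scheme or target.netloc:
                continue  # external or non-HTTP link; out of scope here
            if target.path not in pages:
                broken.setdefault(path, []).append(href)
    return broken


# Hypothetical two-page site where the home page links to a missing page.
PAGES = {
    "/": '<a href="/about">About</a> <a href="/pricing">Pricing</a>',
    "/about": '<a href="/">Home</a> <a href="https://example.org/">Partner</a>',
}

print(find_broken_links(PAGES))
```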

9. Combine CSS Files

The more streamlined your code is, the easier Googlebot can navigate your site.

Combine your CSS files and take other actions to streamline your site's code.

10. Update Your Sitemap

Keep your sitemap updated regularly, and declutter it so it lists only the pages you actually want crawled.

If you have blocked pages, needless redirects, and 4xx error pages clogging up your sitemap, this can get in the way of Googlebot doing its job.

Online auditing tools can help you clean up your sitemap, so you don't need to do it from scratch.

This work will also give your users a more positive experience on your site by reducing dead ends and redirects that keep them from browsing easily.
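If you'd rather check a sitemap by hand, the core of the cleanup is simple: list the URLs in the sitemap and flag the ones that don't return a 200. This is a minimal sketch that assumes a standard sitemaps.org XML file and that you already know each URL's status code (for example, from your own crawl or server logs); the sample sitemap and the `status_by_url` mapping are made up.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return every <loc> URL listed in a sitemap XML string."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def urls_to_remove(sitemap_xml, status_by_url):
    """List sitemap URLs whose known status is anything other than 200.

    URLs missing from `status_by_url` are assumed to be fine.
    """
    return [
        url for url in sitemap_urls(sitemap_xml)
        if status_by_url.get(url, 200) != 200
    ]


# Hypothetical sitemap and status codes.
SAMPLE_SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old</loc></url>
  <url><loc>https://example.com/gone</loc></url>
</urlset>
"""
STATUSES = {
    "https://example.com/": 200,
    "https://example.com/old": 301,
    "https://example.com/gone": 404,
}

print(urls_to_remove(SAMPLE_SITEMAP, STATUSES))
```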

11. Set Dynamic URL Parameters

Your site might use dynamic URLs, where several different URLs take users to the same web page.

However, Googlebot will treat all of those URLs as different pages, no matter where they end up.

In the legacy Google Search Console, this was handled under "Crawl > URL Parameters."

There, you could inform Googlebot of any URL parameters that change the URL but not the page itself. Google retired that tool in 2022, so canonical tags and robots.txt rules are now the usual way to send the same signal.
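To see how many of your URLs are really the same page, you can normalize them by stripping the parameters that don't change the content. Here's a minimal sketch with Python's standard `urllib.parse`. The parameter names in `IGNORED_PARAMS` are assumptions for illustration; which parameters are safe to ignore depends entirely on your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that change the URL but not the page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "sessionid", "ref"}


def canonical_url(url):
    """Drop ignored query parameters and sort the rest so equivalent URLs match."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(canonical_url("https://example.com/shoes?utm_source=news&color=red"))
print(canonical_url("https://example.com/shoes?sessionid=42"))
```

Grouping your crawled URLs by their normalized form shows which parameters are multiplying your URL count without adding any new pages.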

12. Use Feeds to Your Advantage

RSS and other XML feeds let web users subscribe to updates from your site, so they get your content even when they're not on it.

They'll get an alert every time you put up a new post they want to see, and in turn, you will gain more attention from Googlebot (as well as attract more readers).

13. Organize Internal Links

A high-quality internal link strategy can go a long way for your budget.

Search bots will be able to find your pages more easily if you have a nicely maintained internal link system, even though these links don't tend to affect crawl rates directly.

14. Boost SEO

As you have probably noticed, a lot of these budget optimization strategies are also SEO-building strategies.

Having a well-maintained site with great SEO makes it easier for Google and other search bots to find and navigate your site.

Follow good SEO practices, and you will add to your budget.

Related reading: 10 Affordable SEO Tips to Improve Your Ranking

15. Reduce or Compress Images

A clean, simple website that operates smoothly is helpful to both Google and your users.

Images are important for most websites. However, large images slow down the speed at which your site can be crawled - or viewed.

Try reducing image dimensions first, and compress the files if that isn't enough.

16. Stay Away from the Infinite

This may sound ridiculous, but it is possible for your site to have places where the links go on indefinitely.

For example, if you have a calendar with a button to view "Next Month," a user could conceivably click through that calendar indefinitely.

Users won't actually do this, but Googlebot might, which will hinder it from reading the actual pages on your site.

Get rid of those infinite links and keep only those that are useful.
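One way to hunt for these infinite spaces is to walk your own links with a depth cap and see whether the walk is still finding new URLs when it hits the cap. The sketch below is a toy model of that idea, not how Googlebot works: `get_links` stands in for whatever returns the links on a page, and `calendar_links` is a made-up calendar whose "Next Month" link never ends.

```python
from collections import deque


def crawl_with_depth_cap(start, get_links, max_depth):
    """Breadth-first walk from `start`; return (visited, hit_cap).

    `hit_cap` is True when unvisited links remained at the depth cap,
    which hints that the link chain may never end.
    """
    visited = {start}
    queue = deque([(start, 0)])
    hit_cap = False
    while queue:
        url, depth = queue.popleft()
        for link in get_links(url):
            if link in visited:
                continue
            if depth >= max_depth:
                hit_cap = True
                continue
            visited.add(link)
            queue.append((link, depth + 1))
    return visited, hit_cap


def calendar_links(url):
    """Hypothetical calendar page: every month links to the next month."""
    month = int(url.rsplit("=", 1)[1])
    return [f"/calendar?month={month + 1}"]


visited, hit_cap = crawl_with_depth_cap("/calendar?month=1", calendar_links, 3)
print(len(visited), hit_cap)
```

A section that still hits the cap after you raise it is a good candidate for a robots.txt rule or a redesign of the link.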

17. Erase Duplicate Content

You probably already know that duplicate content can hurt your Google rankings.

It can also affect your budget when it comes to getting crawled.

Find and eliminate any duplicate content to keep Googlebot happy.
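A simple way to find exact duplicates is to hash each page's text and group the pages that share a hash. This is a minimal sketch, assuming you've already extracted the text of each page into a dictionary; the sample pages are made up, and this approach only catches pages that are identical after whitespace and case are normalized, not near-duplicates.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """Hash the text after lowercasing and collapsing whitespace."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Group URLs whose text is identical after normalization.

    `pages` maps a URL to that page's extracted text.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical page text.
SAMPLE_PAGES = {
    "/a": "Red  shoes\non sale",
    "/b": "red shoes on sale",
    "/c": "Blue shoes",
}

print(find_duplicates(SAMPLE_PAGES))
```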

18. Market Your Content

As mentioned above, Google is more likely to crawl pages that have achieved popularity.

Use a good content marketing strategy to help get your content out there, and you'll get more of the right kind of attention from Googlebot.

19. Change Old Content

You can also update your old content to get it crawled again.

When you make changes to old pages, Google will usually do another crawl to make sure it has the information it needs.

20. Write New Content

The final content-related strategy is another SEO strategy: write new content.

Keep Googlebot crawling by providing fresh content for it to crawl regularly. This will boost your SEO and keep readers interested, as well!

Final Thoughts

Understanding the crawl budget is actually simpler than it may sound at first.

Boosting your budget uses a lot of the same tactics as boosting your SEO.

Using good site practices all around will help keep your budget where it needs to be and also keep users happy.

As you can see, there are many different ways to grow that budget effectively.

With these 20 steps, you can keep Googlebot crawling your site on the regular.

Have you tried any other budget-boosting strategies? Leave a comment and let me know!
